Our users are international students living abroad who want to be reminded of the sensory experience (sight, sound, smell) of the weather in their home countries. They need ways to feel connected to homes and families that are far away from them.

Outcome

In this part of the ecosystem, our goal is for students to feel connected to their hometown's environment through the familiar sounds they used to hear every day back home. Sound can be delivered non-intrusively and carries valuable memories. We set out to design an ambient device that combines an attractive form with comforting sounds to "bring" students back to their homes.

Concept Description:

A house-shaped device containing a mini speaker that plays nature sounds according to the weather and temperature at home. A weather webhook delivers the current condition (Sunny, Cloudy, Clear sky, Rainy) to the Particle Photon, which triggers a different sound scenario for each one. For example, when it is sunny in the student's hometown, the speaker plays a pre-recorded audio of sparrows chirping; when it is rainy, the student hears toads and frogs croaking; and during winter, the user hears crickets.
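The mapping from hometown weather to soundscape can be sketched as a small lookup table. This is a Python sketch of the selection logic only, not the Photon firmware. The track numbers, the fallback track for Cloudy and Clear sky, and the rule that winter overrides the daily condition are all assumptions, since the description does not say how season and weather combine.

```python
# Hypothetical track numbers: many mini MP3 player modules address recordings
# by index, but which index holds which recording is an assumption here.
TRACK_SPARROWS = 1   # sunny
TRACK_FROGS = 2      # rainy
TRACK_CRICKETS = 3   # winter
TRACK_DEFAULT = 4    # assumed fallback for Cloudy / Clear sky

WEATHER_TRACKS = {
    "Sunny": TRACK_SPARROWS,
    "Rainy": TRACK_FROGS,
}


def pick_track(condition: str, is_winter: bool) -> int:
    """Choose the track to play for the hometown's current weather.

    Assumption: the winter soundscape takes precedence over the daily
    condition, because the write-up does not say how the two combine.
    """
    if is_winter:
        return TRACK_CRICKETS
    return WEATHER_TRACKS.get(condition, TRACK_DEFAULT)
```

For example, `pick_track("Rainy", False)` selects the frog recording, while any condition during winter selects the crickets. Keeping the table separate from the function makes it easy to extend when more recordings are added.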

Brainstorming:

After talking to the other two teams in @home, we started brainstorming how to convey sounds that make our user feel at home. Together with the other two teams, we discussed what message we would use to create an environment the user is familiar with. When we interviewed Manchit, our advisor, he expressed how much he missed the weather in Mumbai while living in Pittsburgh. On snowy days in particular, Manchit misses how pleasant the weather was in Mumbai. With the two other teams, we decided to use Mumbai's weather data to bring our user home.

As the weather in Mumbai changes, the sight team projects different icons on the wall, and the smell team diffuses different scents. For us, the sound team, the most straightforward approach was to convey each kind of weather through its sound, for example the sound of rain. We wanted to include not only the sounds of rain and wind but also animal sounds, such as crows and crickets, to help picture home away from home. We then prepared seven audio tracks combining different seasons and weather conditions so that they could correspond to real-time data.

Prototype:

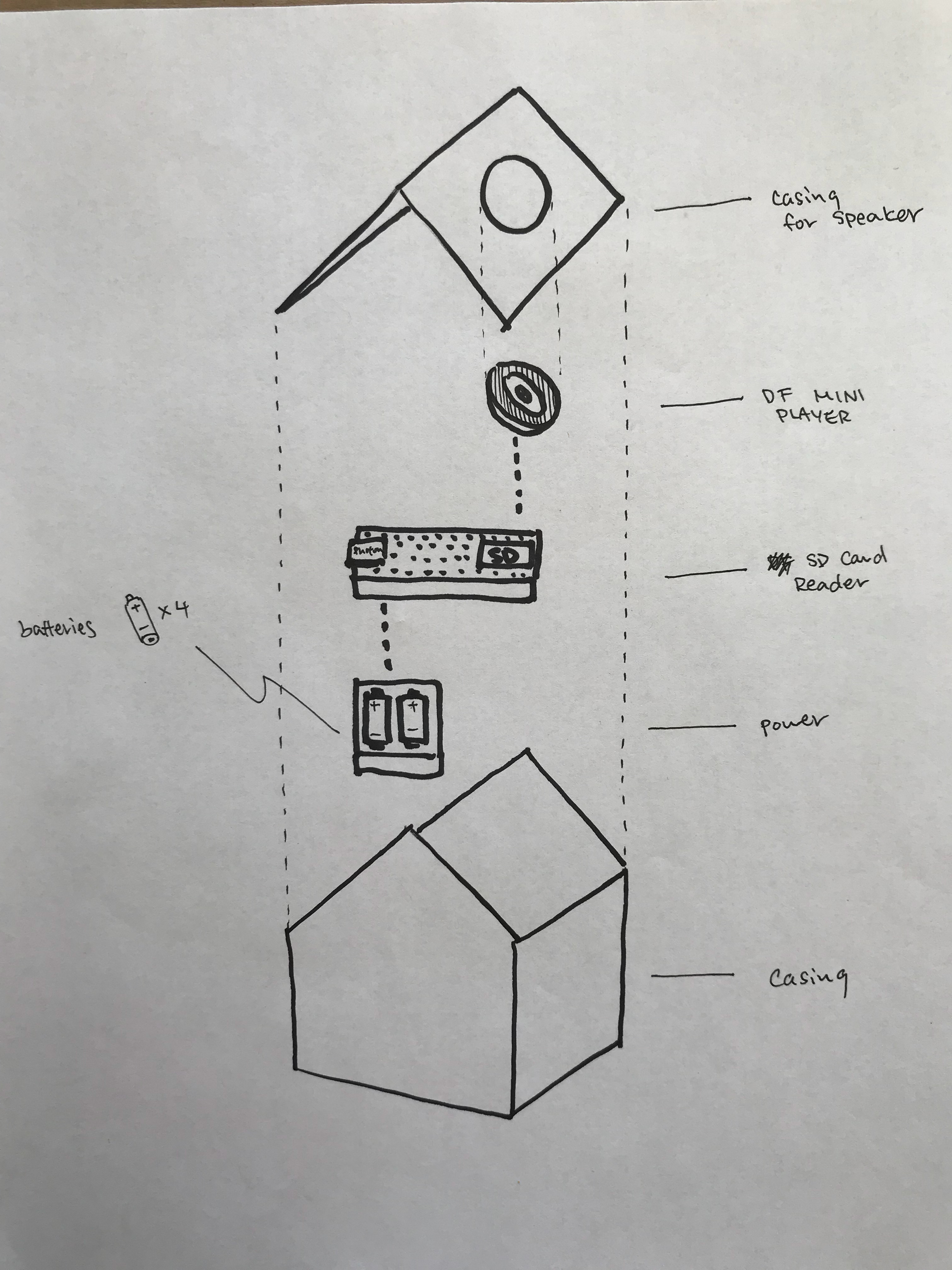

For the physical prototype, we first came up with the idea of a house-shaped box and decided to make it by laser cutting. We then decided to incorporate Mumbai's skyline into the prototype. By tracing a picture of the skyline, we were able to laser cut a house with Mumbai's skyline on it. We also made the rooftop a modular piece that can be changed according to users' needs.

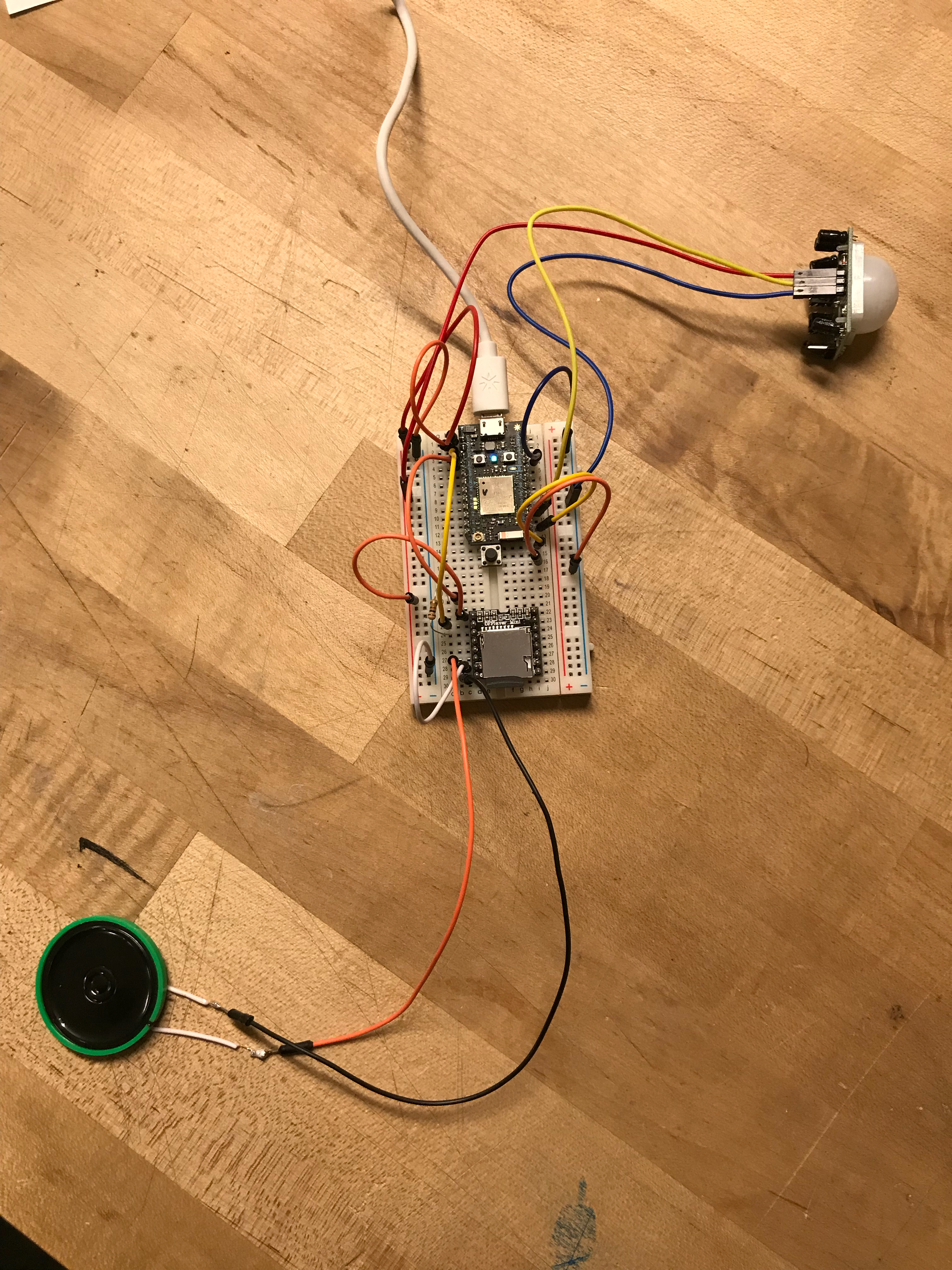

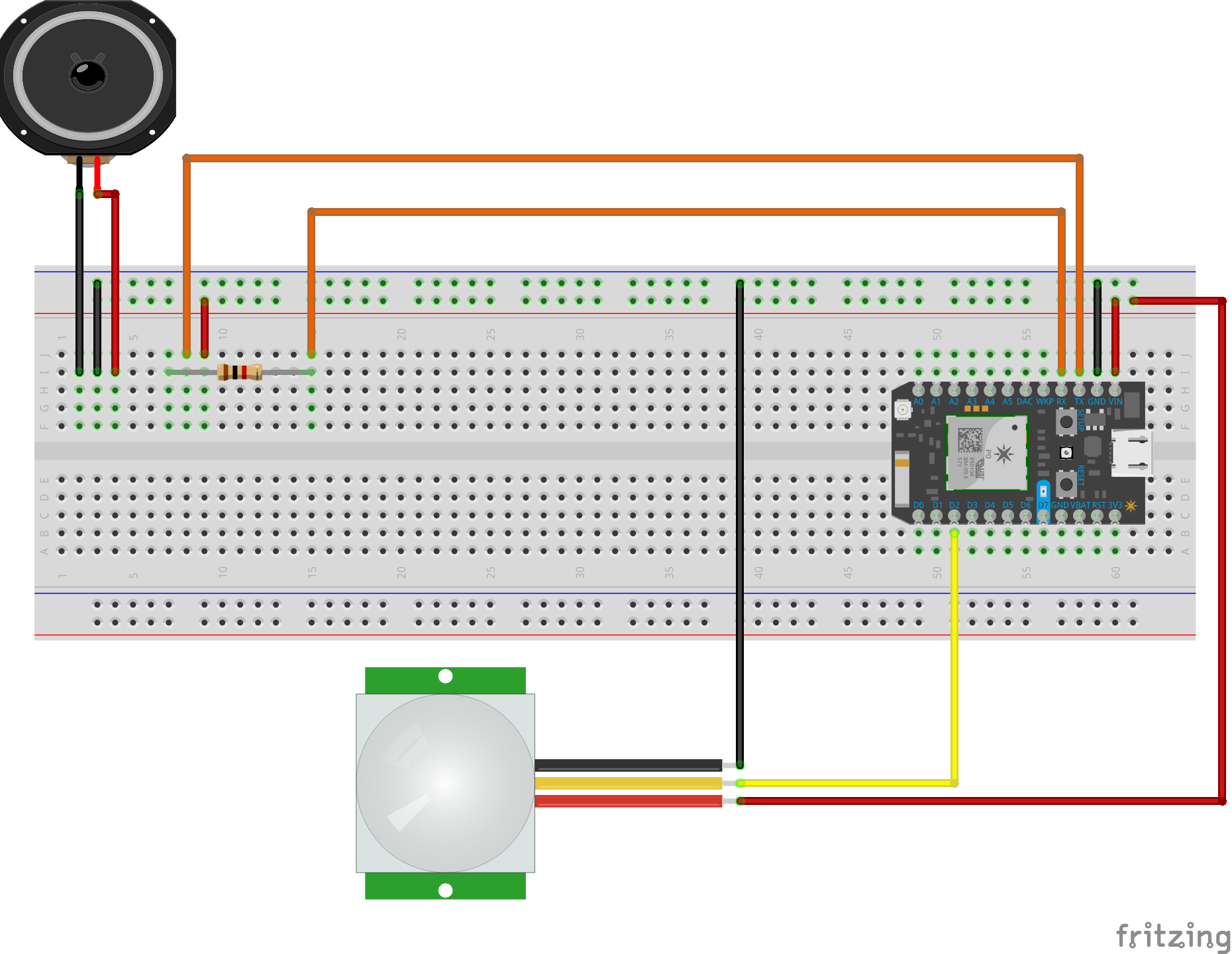

For the coding prototype, we first implemented a button to turn the speaker on and off. We then reasoned that “sound of home” should not play if no one is in the room, so we added a motion sensor so that the system only operates when it senses movement. We used webhooks to read the chance of precipitation and its intensity from a weather API, and used those values to trigger different modes so that the player plays different audio tracks accordingly. At first, each of the three objects in team @home did this independently. After receiving feedback, however, we decided it would be more intuitive for the three objects to talk to each other than to work separately. We then updated the code so that “sound of home” publishes events that the other two objects subscribe to, changing their outputs accordingly.
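The control flow described above (motion gating, precipitation-based selection, and publishing events for the other objects) can be sketched in Python as follows. This is an illustrative model, not the device code: the thresholds, the 5-minute motion timeout, the event name prefix `athome/`, and the choice to publish even when no one is in the room are all hypothetical. `EventBus` is a toy in-memory stand-in for the cloud's publish/subscribe, with subscriptions matching on an event-name prefix as `Particle.subscribe` does.

```python
from typing import Callable, List, Optional, Tuple

# All thresholds and event names below are hypothetical; the write-up
# does not give the values used on the device.
RAIN_CHANCE_THRESHOLD = 0.5   # chance of precipitation, 0..1
HEAVY_RAIN_INTENSITY = 4.0    # assumed unit: mm/h
MOTION_TIMEOUT_S = 300        # keep playing 5 min after the last motion
EVENT_PREFIX = "athome/"      # hypothetical shared event-name prefix

Handler = Callable[[str, str], None]


def rain_level(chance: float, intensity: float) -> str:
    """Classify the webhook's precipitation data into a coarse level."""
    if chance < RAIN_CHANCE_THRESHOLD:
        return "dry"
    return "heavy" if intensity >= HEAVY_RAIN_INTENSITY else "light"


class EventBus:
    """In-memory stand-in for cloud publish/subscribe (prefix matching)."""

    def __init__(self) -> None:
        self._subs: List[Tuple[str, Handler]] = []

    def subscribe(self, prefix: str, handler: Handler) -> None:
        self._subs.append((prefix, handler))

    def publish(self, name: str, data: str) -> None:
        for prefix, handler in self._subs:
            if name.startswith(prefix):
                handler(name, data)


class SoundOfHome:
    """Sound object: plays only after recent motion and shares the weather."""

    def __init__(self, bus: EventBus, timeout_s: float = MOTION_TIMEOUT_S):
        self.bus = bus
        self.timeout_s = timeout_s
        self.last_motion: Optional[float] = None
        self.playing: Optional[str] = None  # stands in for the mini player

    def on_motion(self, now: float) -> None:
        self.last_motion = now

    def active(self, now: float) -> bool:
        return self.last_motion is not None and now - self.last_motion < self.timeout_s

    def on_weather(self, now: float, chance: float, intensity: float) -> None:
        level = rain_level(chance, intensity)
        # Assumption: always publish, so the sight and smell objects stay in sync.
        self.bus.publish(EVENT_PREFIX + "rain", level)
        # Only drive the speaker while someone has recently been in the room.
        self.playing = level if self.active(now) else None
```

A subscriber such as the sight object would register with `bus.subscribe("athome/", handler)` and switch its icon whenever a new level arrives. Centralizing the webhook in one object means the three devices never disagree about the current weather.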

Both the code and the physical prototype turned out as we expected. The physical prototype is a house-shaped wooden box measuring 6 in × 6 in, with a height of 5 in. We also built a modular triangular rooftop with cut-outs for the speaker and the motion sensor. The sound team is now the only team connected to the weather data through webhooks; it publishes events that the other two objects subscribe to and act on. The following is a summary of the bill of materials, the circuit diagram, and the code iterations used during the process:

Making this project was a memorable process. We learned that it is important to align on a goal and on a direction for approaching the problem. At the beginning of the project, the ten of us met several times as one big team to brainstorm and agree on use cases. We found that there was less duplicated work when we kept the conversation open not only within each small group but also across the whole team. As a result, both the coding and physical prototypes turned out as the whole team expected.

Something we learned:

- Using a mini player and a mini speaker

- Using a motion sensor as a switch for the system

- Using webhooks to get data from an API

- Connecting multiple devices into a holistic IoT system

Challenges we faced:

- When we started implementing the mini player, we could not figure out why no audio was playing even though there were no errors in the code or the circuit. After searching online for a solution, we found that a hidden file, which was also the first file on the SD card, prevented the player from playing the right audio. We worked around this by using a PC to copy the audio files onto the SD card.

- When we tried to use the weather data, there was so much available information that we did not know how to select only the parts we needed. We then sorted the conditions into groups, for example putting “wind” and “tornado” into the same group, which made the weather data easier to navigate.
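For the hidden-file problem in the first challenge, an alternative to switching computers is to delete the metadata before ejecting the card. The sketch below assumes the culprit was macOS metadata (AppleDouble `._*` files, `.DS_Store`, and system folders such as `.Trashes`), which is a commonly reported cause with mini players that index tracks in the order files were written; the write-up does not confirm which file it was, and whether deletion alone restores the track order on a given player is untested here.

```python
import os
import shutil

# Assumed culprits: metadata macOS commonly writes to removable FAT volumes.
HIDDEN_DIRS = {".Spotlight-V100", ".Trashes", ".fseventsd", ".TemporaryItems"}
HIDDEN_FILES = {".DS_Store"}


def is_mac_metadata(name: str) -> bool:
    """True for .DS_Store and AppleDouble companion files like ._0001.mp3."""
    return name in HIDDEN_FILES or name.startswith("._")


def clean_sd_card(root: str) -> list:
    """Delete macOS metadata under root and return the removed paths."""
    removed = []
    for dirpath, dirnames, filenames in os.walk(root, topdown=True):
        # Iterate over a copy so we can prune dirnames in place.
        for d in list(dirnames):
            if d in HIDDEN_DIRS:
                full = os.path.join(dirpath, d)
                shutil.rmtree(full)
                removed.append(full)
                dirnames.remove(d)  # do not descend into the deleted folder
        for f in filenames:
            if is_mac_metadata(f):
                full = os.path.join(dirpath, f)
                os.remove(full)
                removed.append(full)
    return removed
```

It would be called with the card's mount point, for example `clean_sd_card("/Volumes/SDCARD")`, where the volume name is hypothetical.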
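The grouping described in the second challenge can be sketched as keyword matching over the raw condition strings. The condition strings, group names, and keyword lists below are hypothetical, since the actual API vocabulary is not given; groups are checked in dictionary order, so an earlier group wins when a description matches several.

```python
# Hypothetical keyword groups; the real API's vocabulary may differ.
GROUPS = {
    "wind": ["wind", "tornado", "squall", "gust"],
    "rain": ["rain", "drizzle", "shower", "thunderstorm"],
    "clear": ["clear", "sunny"],
    "cloudy": ["cloud", "overcast", "fog", "mist", "haze"],
    "cold": ["snow", "sleet", "hail", "ice"],
}


def group_condition(raw: str) -> str:
    """Collapse a raw weather description into one coarse group."""
    text = raw.lower()
    for group, keywords in GROUPS.items():
        # First matching group wins (dicts preserve insertion order).
        if any(keyword in text for keyword in keywords):
            return group
    return "other"
```

With this, both “Tornado” and “Strong wind” land in the `wind` group, so the rest of the system only has to handle a handful of cases instead of every string the API can return.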

Future Iterations:

- Adding more audio, such as familiar songs played during festivals and special songs for family members when their birthdays approach

- Two-way communication between users and their families: for instance, when the weather changes and the three objects respond to it, a text is sent to the user's family saying something like “Hey mom, it's getting cold, be warm!”
