Intention

When I typed "epidemic of" into a Google search bar, one of the first suggestions to appear was the word "loneliness". For research purposes only, I clicked on that suggestion and watched the search engine find about 2,640,000 results for me in under 0.5 seconds. The first dozen results on my screen were fairly recent: links to articles from The Atlantic, The Economist, The New York Times and The Washington Post, all published within the last couple of years.

Glancing at these articles, it seemed to me that today's technology, which can connect millions of electronic gadgets to each other, is not quite able to give us a feeling of connection and companionship. The train of thought that started when I typed "epidemic of" into a search bar went into hyperspeed while I was conducting my field study at a close friend's house. He is an international student and lives alone, just like me.

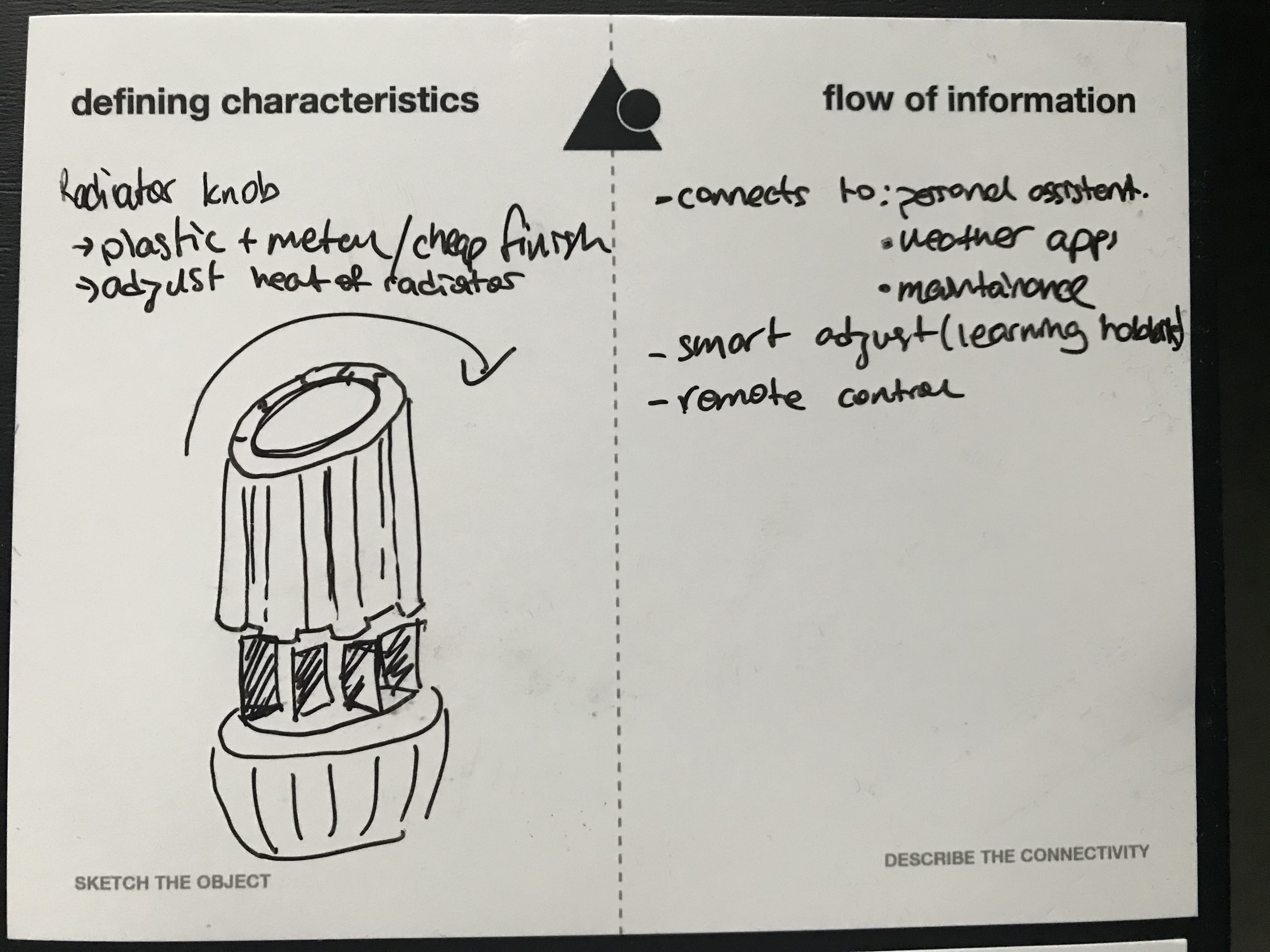

During the interview, we went through many of the objects in the house: the Google Home Mini, the smart plugs, the microwave, the sofa, the radiator knob, the steam cooker... Interestingly, all his favorite gizmos shared the same set of features! They offered efficiency and productivity, and they were easily replaceable if they broke. However, none of these items offered a connection on an emotional level. He mentioned only one or two objects that could evoke feelings or memories.

I was trying to understand why it was so hard for him to name a personally valuable object in the house, and then it hit me. As international students moving to a distant country, we can only bring our most necessary belongings with us, and we only buy the most necessary gadgets to keep the budget afloat. He couldn't name emotional objects because they weren't there in the first place. The candidates for that role are probably neatly packed in a cardboard box, sitting in an old storage room miles away. So we have no objects that connect us to the house, and we need to build our homes from scratch.

Coming back to the interview, the answer we co-designed for the emotional need arising from a house full of dispassionate objects was to share the space with another living creature: a dog, a cat, a tortoise or a plant. Combining these ideas, and remembering my mother's advice about turning my house into a home, I decided to focus my attention on plants.

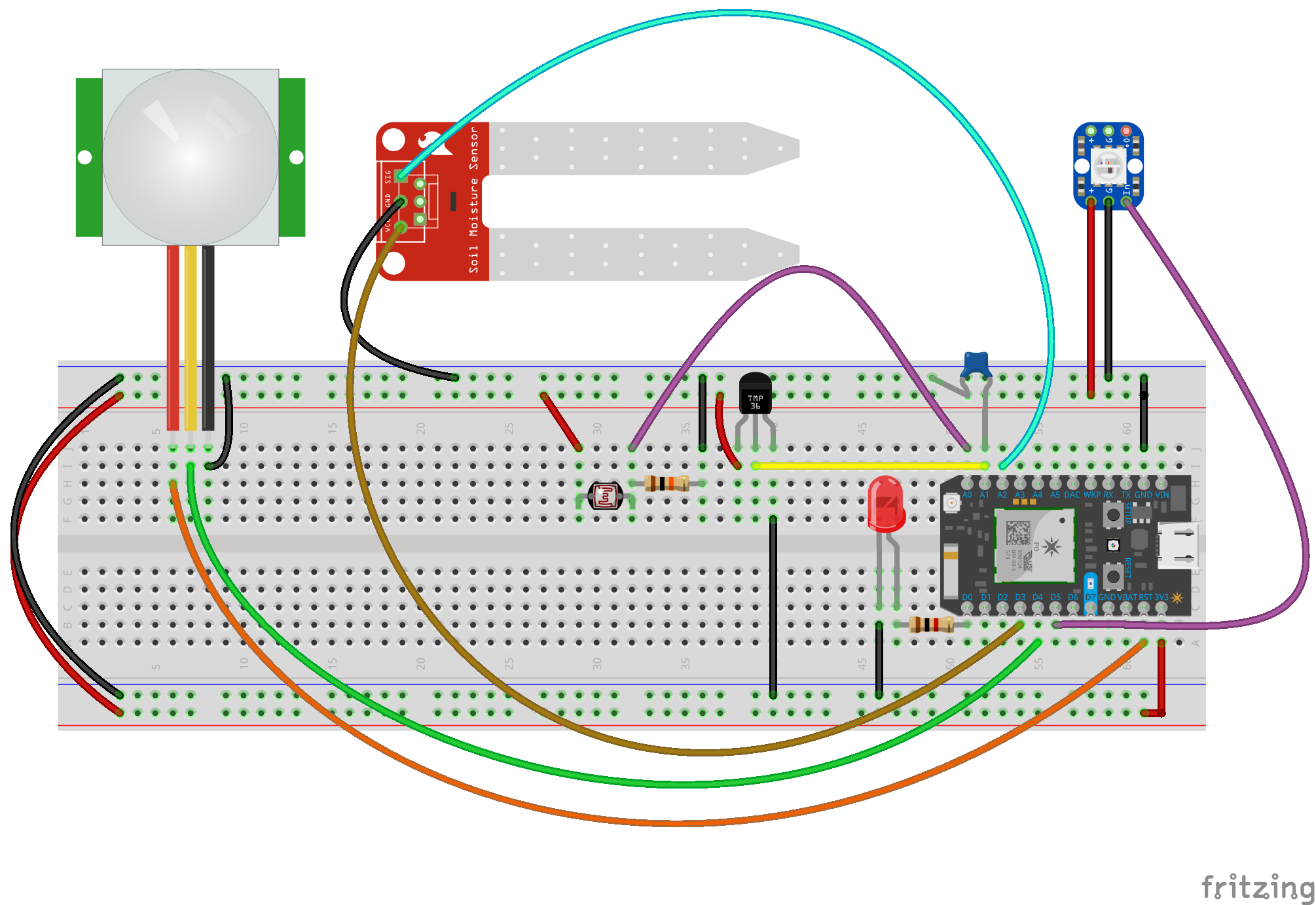

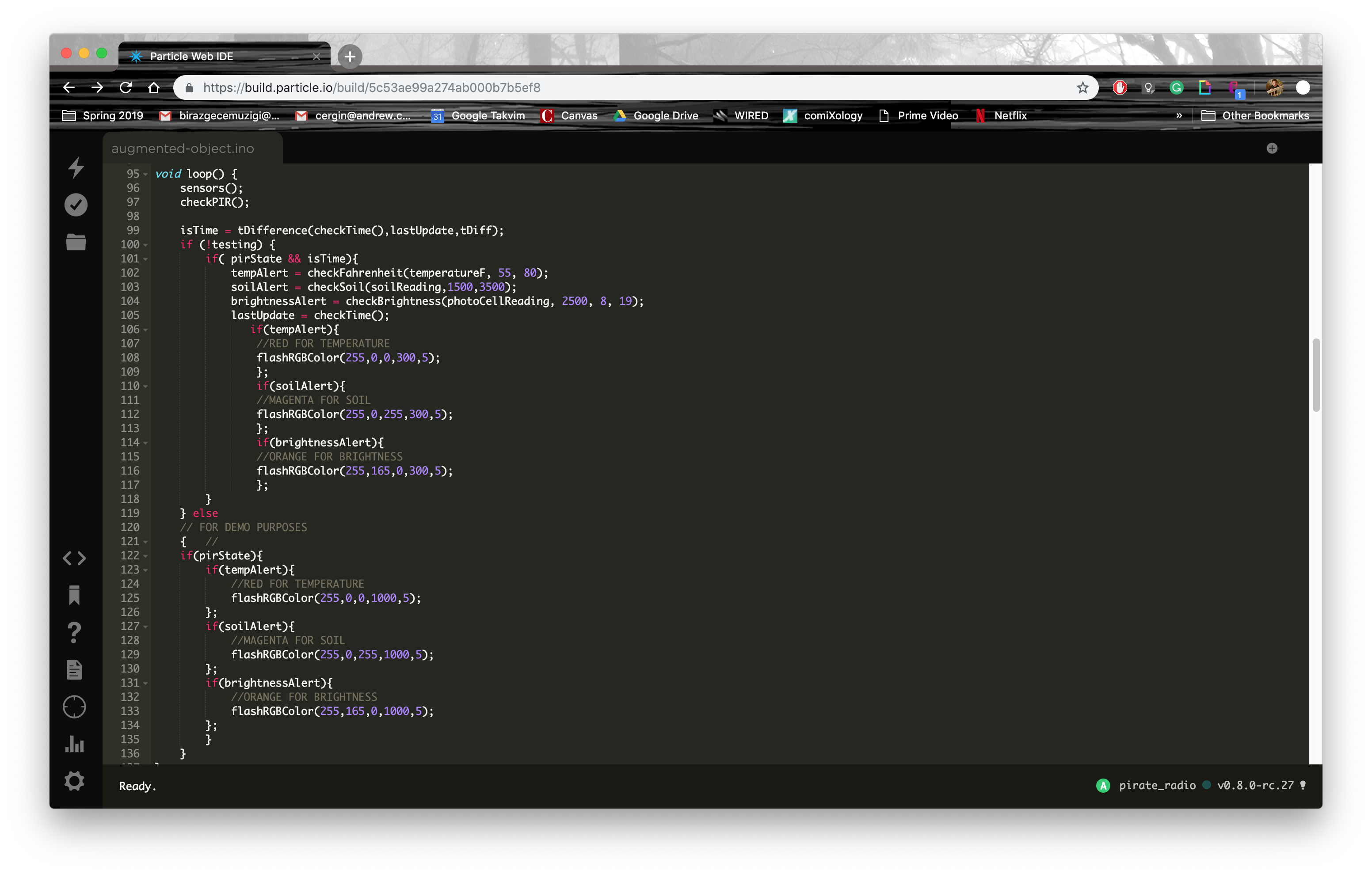

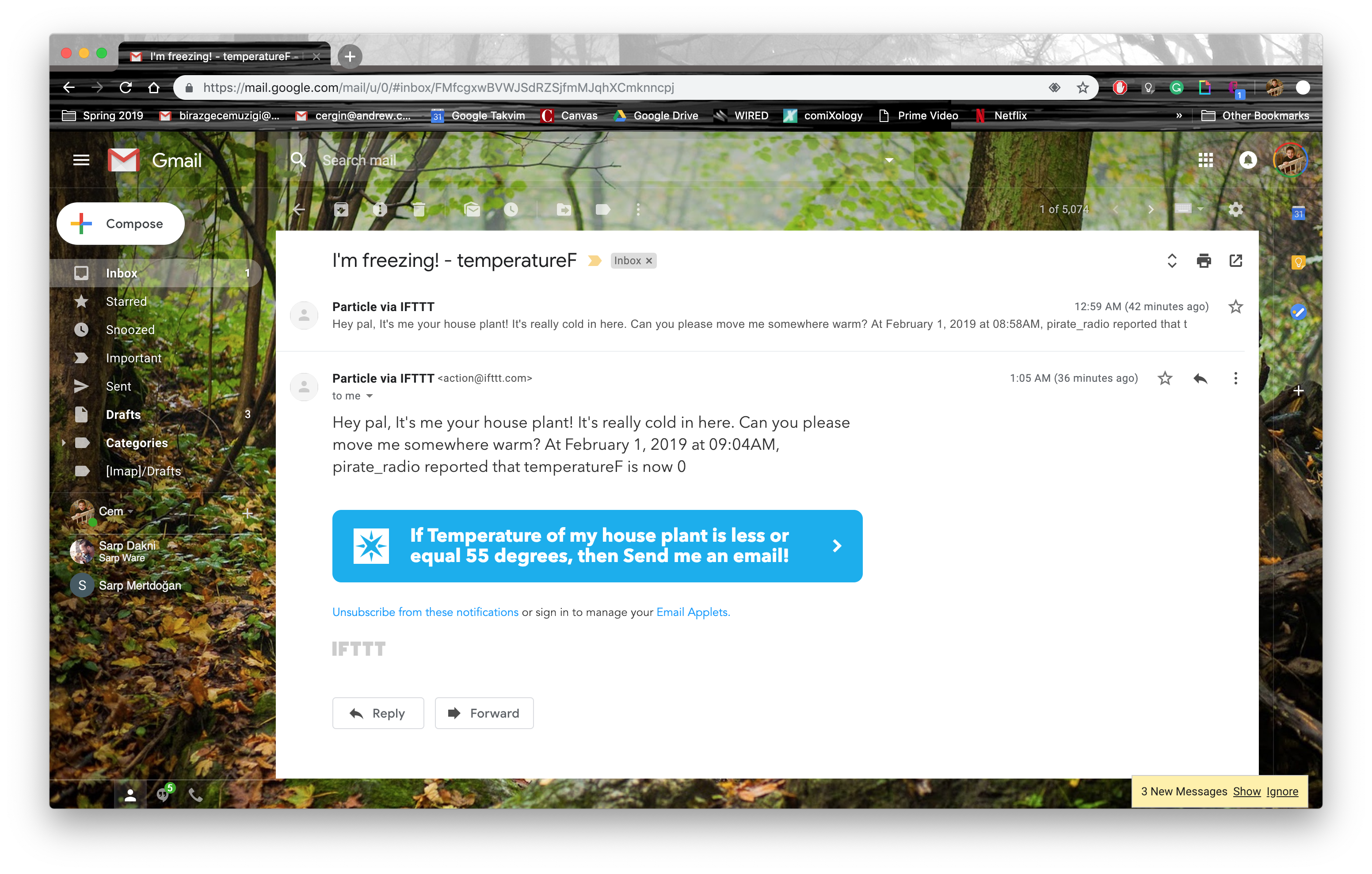

My intention was to investigate ways of enchanting our interactions with houseplants.